Dockerization of Node.JS Applications on Amazon Elastic Containers


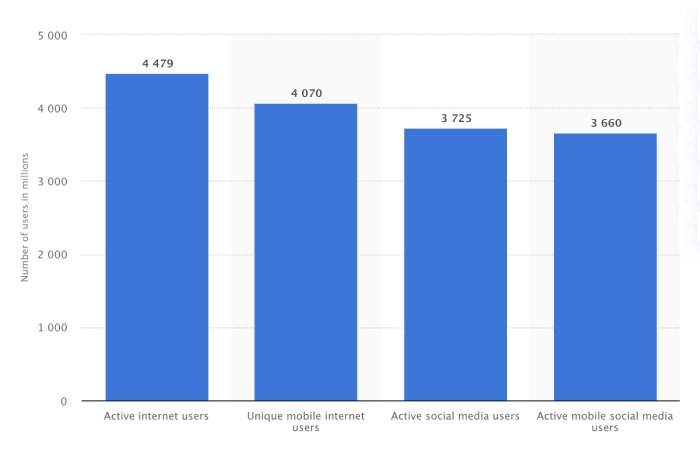

Number of users in millions

The Internet has grown rapidly within the past decade. As of October 2019, according to Statista, 4.48 billion people around the world were active internet users. This growth demands highly scalable services that are fail-free and consume the minimum of resources when running, because everything in the cloud comes at a cost. This article walks through building a highly available, scalable NodeJS application for critical tasks on the Amazon cloud, with an architecture that keeps its cost low.

At the end of this article you should have learned about:

- Dockerization of a Local Node Application

- Building and Uploading a Docker Image

- Running a Docker Container Locally

- Dockerization of a Node Application on AWS

- Creating Elastic Container Registries

- Clustering on ECS and Running it as a Service

Prerequisites

To keep things productive, the following tools need to be installed first. Almost all of them come with automated installers, so this shouldn’t be a hassle.

- Node and NPM

- AWS CLI

- Docker

NodeJS Boilerplate Application

Let’s start by cloning the boilerplate code that I have written for a NodeJS application:

https://github.com/th3n00bc0d3r/NodeJS-Boilerplate

    git clone https://github.com/th3n00bc0d3r/NodeJS-Boilerplate
    cd NodeJS-Boilerplate
    npm i
    node app.js
    Listening: 3000

The boilerplate code gives you a basic web server built on express.js that listens on port 3000 and handles two requests:

- An HTTP request answered with a plain response at http://localhost:3000/

- An HTTP GET request answered with a JSON response at http://localhost:3000/test
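With the server running, both endpoints can be exercised from a second terminal. The sketch below assumes curl is installed; smoke_test is a hypothetical helper name and not part of the boilerplate.

```shell
# Hypothetical helper: request both boilerplate endpoints and print
# the HTTP status code returned for each one.
# $1 = base URL (defaults to the local server on port 3000).
smoke_test() {
  base_url="${1:-http://localhost:3000}"
  for path in / /test; do
    # -s silences progress output, -o discards the body,
    # -w prints only the status code.
    status=$(curl -s -o /dev/null -w '%{http_code}' "${base_url}${path}")
    echo "GET ${path} -> ${status}"
  done
}
```

Calling smoke_test with no argument checks http://localhost:3000; passing another base URL checks the same two paths there.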

Dockerizing the Boilerplate Application

Docker is open-source software that lets you create an environment inside a container, so that all dependencies of your application are packed together and it runs reliably. A container is started from a container image, and that image runs the same way regardless of hardware specifications or platform restrictions. Without it, an application might run well on a Linux machine but fail miserably on a Windows machine because of its library and environment path dependencies. Docker also solves the problem of sharing code with someone whose computer, as in most cases, is set up differently from yours.

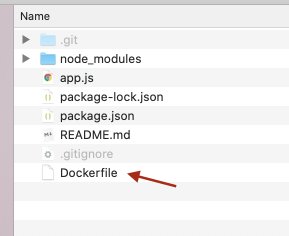

The Dockerfile

A Dockerfile is a set of instructions that tells Docker how to configure and create a container image of your application. To create one, go into the boilerplate application directory and create a file named Dockerfile (the name is case sensitive).

    touch Dockerfile

Dockerfile

Contents of our Dockerfile

    FROM node:6-alpine
    RUN mkdir -p /usr/src/app
    WORKDIR /usr/src/app
    COPY . .
    RUN npm install
    EXPOSE 3000
    CMD [ "node", "app.js" ]

Let’s go through those lines one by one for clarity of the structure of the dockerfile.

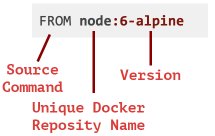

    FROM node:6-alpine

A Dockerfile normally starts with a FROM line, which names the base image. A base image is the starting point for your Docker container. It can be a stripped-down version of Ubuntu or CentOS, so that your application has a dedicated environment. Docker images are built in layers: each instruction adds a layer on top of the previous one, and the resulting image can itself be used as a base image for some other application. Docker Hub is a cloud-based repository, a collection of container images created by users like you and me. You can explore and access Docker Hub at http://hub.docker.com/

We are using our base image from the following URL: https://hub.docker.com/_/node/

To translate the first line of our docker file in general terms we can refer to the following image.

Translating the first line

    RUN mkdir -p /usr/src/app

The RUN instruction executes a command while the image is being built. In this case, we use the mkdir command to create the directory /usr/src/app, which will contain the code of our NodeJS Boilerplate application.

    WORKDIR /usr/src/app
    COPY . .
    RUN npm install

In this set of instructions, WORKDIR sets the working directory inside the image; the instructions that follow it run relative to that directory. Once the working directory is set, we copy our Node application files into it using the COPY instruction, and then use RUN to execute npm install, which installs the dependencies into node_modules.
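Because COPY . . copies everything in the build directory, a node_modules folder installed locally would be copied into the image as well. One common way to avoid that is a .dockerignore file next to the Dockerfile. The sketch below is an assumption, not something the boilerplate is known to ship; if the repository already contains a .dockerignore, extend that file instead of overwriting it.

```shell
# Run from the boilerplate directory, next to the Dockerfile.
# Each line is a path pattern that Docker leaves out of the build context.
cat > .dockerignore <<'EOF'
node_modules
npm-debug.log
.git
EOF
```

With this in place, the dependencies inside the image come only from the RUN npm install step.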

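    EXPOSE 3000

EXPOSE documents that the application inside the container listens on port 3000. It does not publish the port by itself; that happens when the container is run. The last line of the Dockerfile, CMD [ "node", "app.js" ], is the command that starts our node application. It is a way of telling Docker how to run our application.

We are now ready to build the Docker image.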

NOTE: Make sure that Docker is running at this point. If you installed Docker Desktop using the installer, open it; its icon should appear in your taskbar.

Docker Desktop is up and running

Building the Docker Image

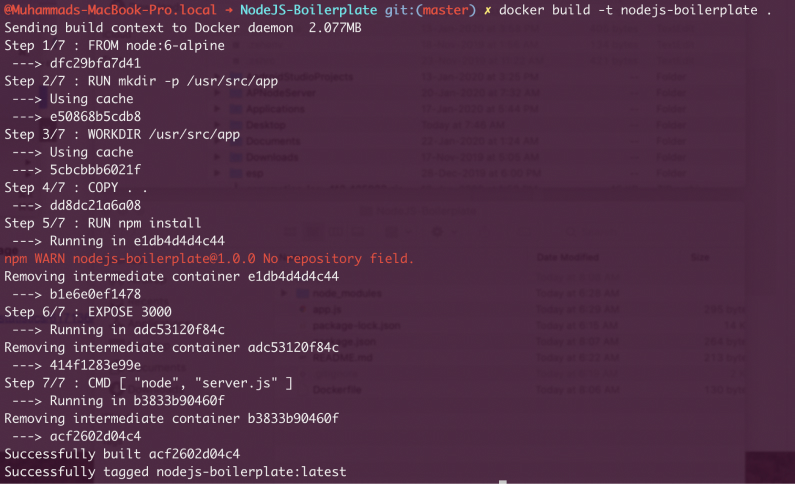

To build a docker image, execute the following command in the NodeJS Application directory.

    docker build -t nodejs-boilerplate .

You should have a similar output:

Creating a docker image

As you can see, the image has been successfully built and tagged. In Docker, a tag works like a version label: you use tags to keep track of images as they are deployed from one environment to another.
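To make the version role of a tag concrete, the image can be built under an explicit version tag and the latest tag pointed at the same image. This is a minimal sketch; build_image is a hypothetical helper name and the version numbers are placeholders.

```shell
# Hypothetical helper: build the image under an explicit version tag and
# also point the "latest" tag at the same image.
# $1 = version label (defaults to 1.0.0).
build_image() {
  version="${1:-1.0.0}"
  docker build -t "nodejs-boilerplate:${version}" . &&
    docker tag "nodejs-boilerplate:${version}" nodejs-boilerplate:latest
}
```

Running build_image 1.1.0 from the application directory would leave both nodejs-boilerplate:1.1.0 and nodejs-boilerplate:latest in the output of docker images.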

Running the Docker Image (Locally)

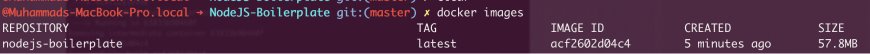

As we have successfully built our first docker image, we can display a list of images that we have built using the following command:

    docker images

Docker images

To generalize the run command for docker:

    docker run -p <external port>:<internal port> {image-id}

Now, run the following command to execute our newly built Docker image. Please keep in mind that, in your case, the image ID will be different. By default, a Docker container does not accept incoming connections from the host. To fix that we use -p, which publishes a container port (here 3000) on a port of the host (here 8080).

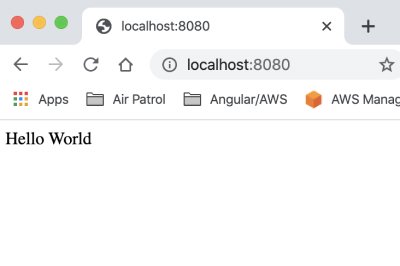

    docker run -p 8080:3000 2f356f3dfec9

You should have the following output, which states that you have successfully executed a docker container image that you have built on your computer:

Successful output

Now, let’s test it out. Open your favorite browser and go to http://localhost:8080/; you should see a similar screen:

localhost:8080

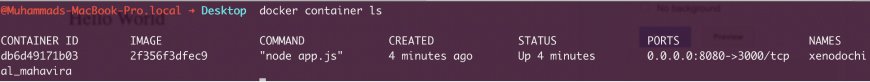
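The run command above keeps the terminal attached to the container. As a variation, the sketch below starts the container in the background under a fixed name, so that later commands can refer to it without looking up an ID. run_detached is a hypothetical helper name and the container name is an assumption.

```shell
# Hypothetical helper: start the image in the background under a fixed name.
# $1 = image name or ID, $2 = host port (the container port 3000 is the
# port the boilerplate listens on).
run_detached() {
  image="${1:-nodejs-boilerplate}"
  host_port="${2:-8080}"
  # -d detaches from the terminal; --name gives the container a stable name.
  docker run -d --name nodejs-boilerplate -p "${host_port}:3000" "$image"
}
```

For example, run_detached nodejs-boilerplate 8080 maps http://localhost:8080/ to the application, just like the foreground command.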

Monitoring a running Docker Container

Let’s monitor our running docker container. To do that, simply type the following command in your terminal:

    docker container ls

docker container ls

Stopping a Docker Container

To stop a docker container, you can use the following command:

    docker stop {container-id}
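Before stopping a container it is often useful to look at what the application printed. The following sketch combines that with stopping and removing the container; stop_and_remove is a hypothetical helper name.

```shell
# Hypothetical helper: show recent output, then stop and delete a container.
# $1 = container name or ID as shown by "docker container ls".
stop_and_remove() {
  container="$1"
  docker logs --tail 20 "$container"   # last 20 lines the app printed
  docker stop "$container"             # SIGTERM, then SIGKILL after a grace period
  docker rm "$container"               # delete the stopped container
}
```

Removing the stopped container is optional; it mainly keeps the list shown by docker container ls -a short.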

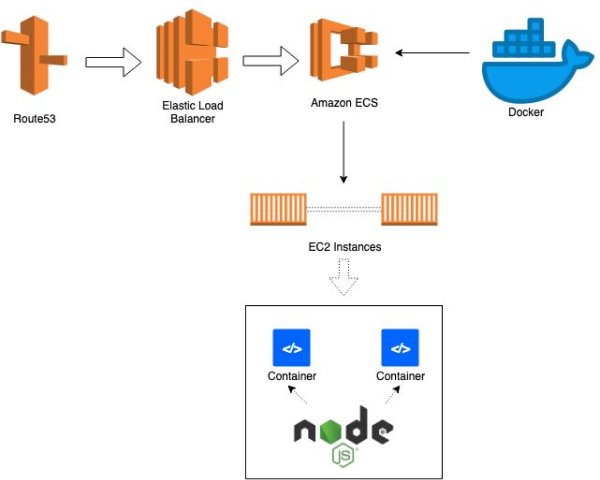

High-Level Overview of Amazon Architecture for Dockerization


Amazon Cloud is truly brilliant. I do agree that it takes some getting used to, but once you get the hang of it, it can do marvels for you. In a nutshell, Amazon ECS (Elastic Container Service) is a service by Amazon that makes it easy to manage Docker containers. Its operations include starting and stopping containers and monitoring their health. All Docker containers are hosted on a cluster, and that cluster can consist of more than one EC2 instance. This makes it highly scalable (based on demand) and very fast.

As we have seen locally, a Dockerfile is built into an image, and the image is spun up as containers. The image is published to ECR (Elastic Container Registry), which is a repository for storing container images. Once you have the image on AWS, a task definition is created. A task definition is a set of instructions in JSON format that tells AWS which container images to use, which environment variables to initialize, and how to map ports for networking. Tasks created from the task definition run on a cluster that is created within the AWS ECS control panel. A cluster is a group of EC2 instances that supply the RAM and CPU consumed by the containers. A service is the scheduler that maintains the number of instances of a task definition that should be running and restarts them in case of a failure.
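To give an idea of what such a task definition looks like, here is a minimal sketch written to a local file. The image URI and the port mapping (host port 80 to container port 3000) mirror the values used later in this walkthrough; the family name, the memory value and the NODE_ENV variable are assumptions made for illustration.

```shell
# Write a minimal ECS task definition for the EC2 launch type.
# The family name, memory value and NODE_ENV variable are assumptions.
cat > task-definition.json <<'EOF'
{
  "family": "nodejs-boilerplate-task",
  "requiresCompatibilities": ["EC2"],
  "containerDefinitions": [
    {
      "name": "nodejs-boilerplate",
      "image": "981984658744.dkr.ecr.us-east-1.amazonaws.com/nodejs-boilerplate:latest",
      "memory": 128,
      "essential": true,
      "portMappings": [
        { "hostPort": 80, "containerPort": 3000, "protocol": "tcp" }
      ],
      "environment": [
        { "name": "NODE_ENV", "value": "production" }
      ]
    }
  ]
}
EOF
```

A file like this could be registered with aws ecs register-task-definition --cli-input-json file://task-definition.json as an alternative to filling in the console form.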

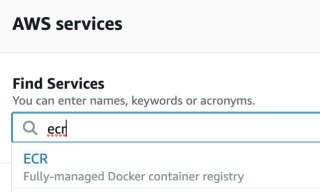

Uploading your Docker Container Image to AWS

Log in to your AWS Console, type ECR into the search box, and then click on the result.

AWS Console

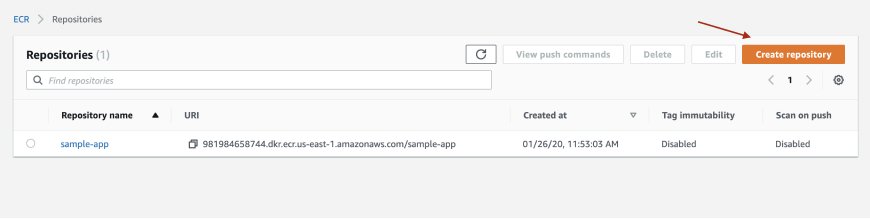

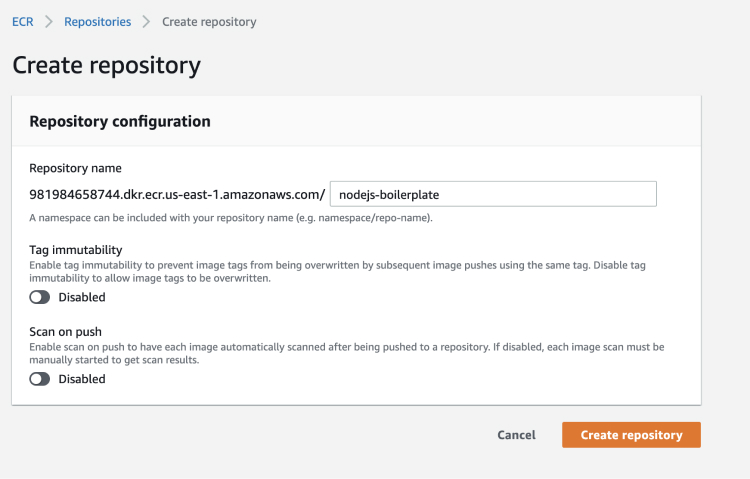

Click on Create Repository

Create Repository

Give your repository a name

Giving a repository a name

You should now have the repository in the management panel:

Repository in the management panel
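The same repository can also be created from the terminal. This is a sketch that assumes the AWS CLI is already configured with credentials; create_repo is a hypothetical helper name, and the defaults match the repository name and region used in this article.

```shell
# Hypothetical helper: create an ECR repository from the command line.
# $1 = repository name, $2 = AWS region.
create_repo() {
  aws ecr create-repository \
    --repository-name "${1:-nodejs-boilerplate}" \
    --region "${2:-us-east-1}"
}
```

The JSON that the command prints includes the repositoryUri, which is the URI needed later for tagging and pushing.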

Note: Before moving forward, you should have your AWS CLI configured.

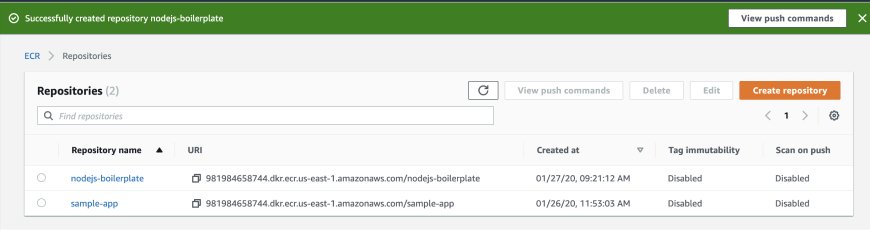

Go to your terminal and authenticate with ECR. To do that, use the following command:

    aws ecr get-login --no-include-email --region us-east-1

The command prints a complete docker login command that contains a temporary password. Copy and paste it into the terminal to authenticate with AWS ECR. Note that get-login exists only in version 1 of the AWS CLI; version 2 replaced it with aws ecr get-login-password.

Secret Key

    docker login -u AWS -p YOURSECRETKEY

You will now see a login successful message.
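On version 2 of the AWS CLI the get-login subcommand is not available. A minimal sketch of the equivalent login follows; ecr_login is a hypothetical helper name, and the registry host is the account-specific part of the image URI used in this article.

```shell
# Hypothetical helper: log Docker in to an ECR registry without printing
# the password. $1 = AWS region, $2 = registry host (account-specific).
ecr_login() {
  aws ecr get-login-password --region "$1" |
    docker login --username AWS --password-stdin "$2"
}
```

For the repository in this walkthrough the call would be ecr_login us-east-1 981984658744.dkr.ecr.us-east-1.amazonaws.com. Piping the password through stdin keeps it out of the shell history.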

Now, we need to tag our image with reference to the image repository we created on ECR:

    docker tag <localimagename>:<tag> <onlineimageuri>:<tag>

    docker tag nodejs-boilerplate:latest 981984658744.dkr.ecr.us-east-1.amazonaws.com/nodejs-boilerplate:latest

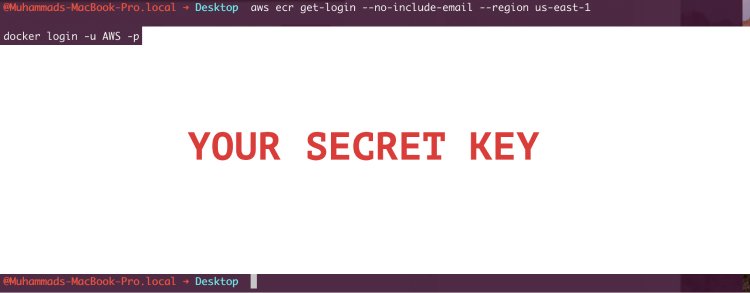

Then, push the image to AWS with the following command:

    docker push 981984658744.dkr.ecr.us-east-1.amazonaws.com/nodejs-boilerplate:latest

You should have a similar output:

Pushing the image on AWS

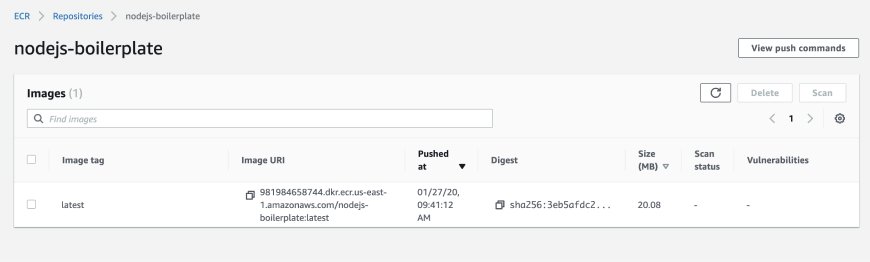

If you check online in the AWS ECR Console, you should have your image up there.

nodejs boilerplate

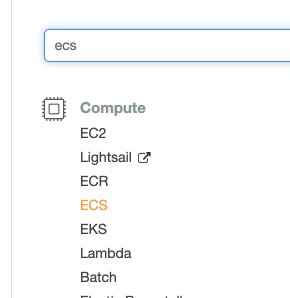
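The pushed image can also be checked from the terminal instead of the console. This is a sketch that assumes a configured AWS CLI; list_ecr_images is a hypothetical helper name.

```shell
# Hypothetical helper: print the tags of the images stored in an
# ECR repository. $1 = repository name, $2 = AWS region.
list_ecr_images() {
  aws ecr describe-images \
    --repository-name "${1:-nodejs-boilerplate}" \
    --region "${2:-us-east-1}" \
    --query 'imageDetails[].imageTags' \
    --output text
}
```

After a successful push, the tag latest should appear in the output.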

Now, go to ECS, in the Amazon Console:

ECS in the Amazon Console

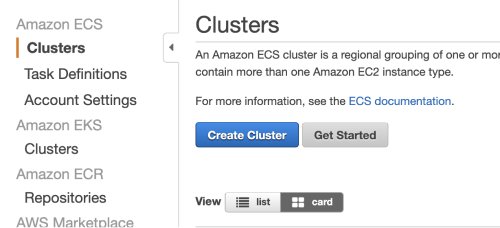

Then, go to Clusters and Create a New Cluster.

Clusters

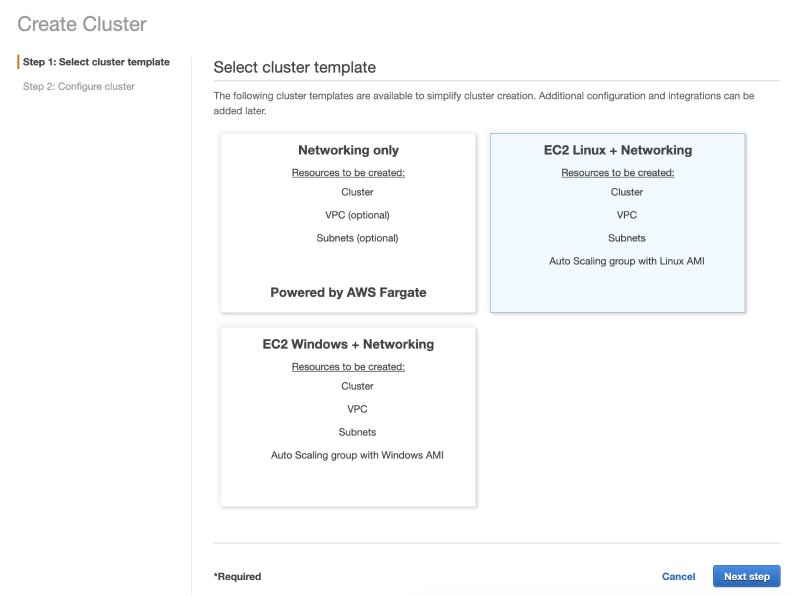

We will be using an EC2 Linux + Networking cluster, which includes the networking component.

Creating clusters

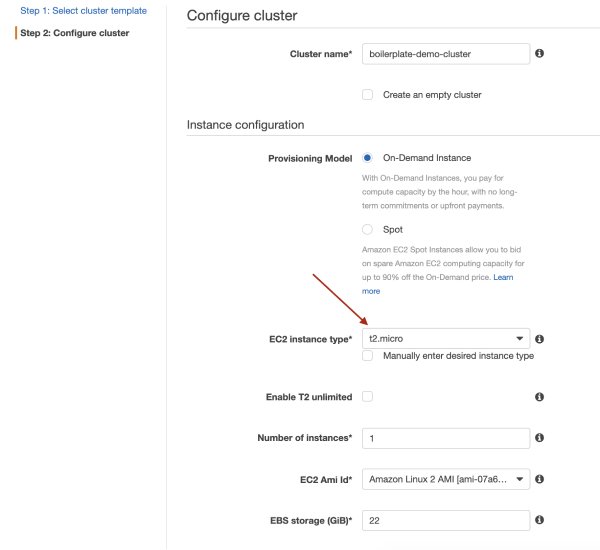

For this configuration, we will be using a t2.micro instance. Give your cluster a name.

Configuring clusters

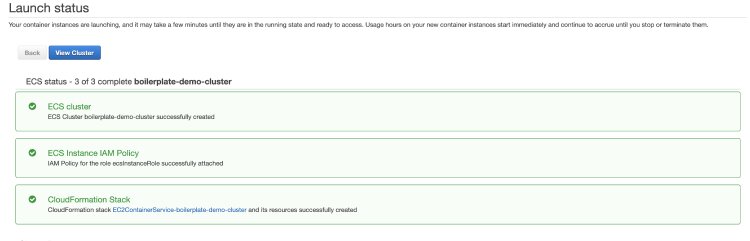

You should see a screen similar to the one below. Next, click on View Cluster.

Launch status

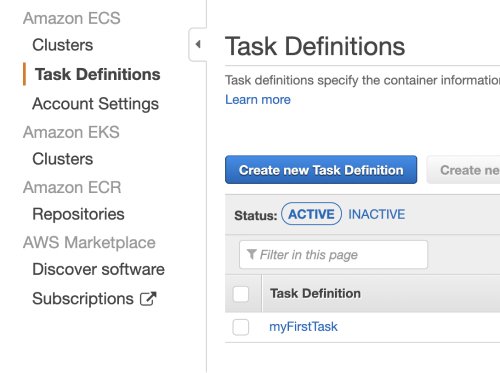

We also need to create a task definition, so click on Task Definitions, and then on Create new Task Definition.

Task definitions

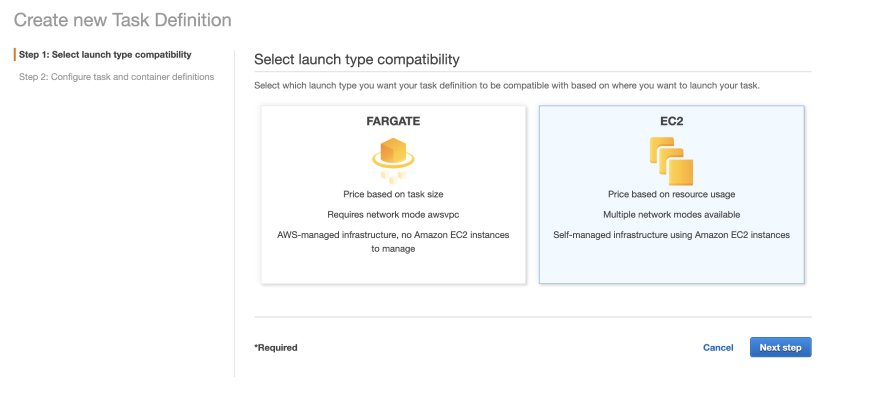

For this, we will select the EC2 launch type compatibility.

Creating a new task definition

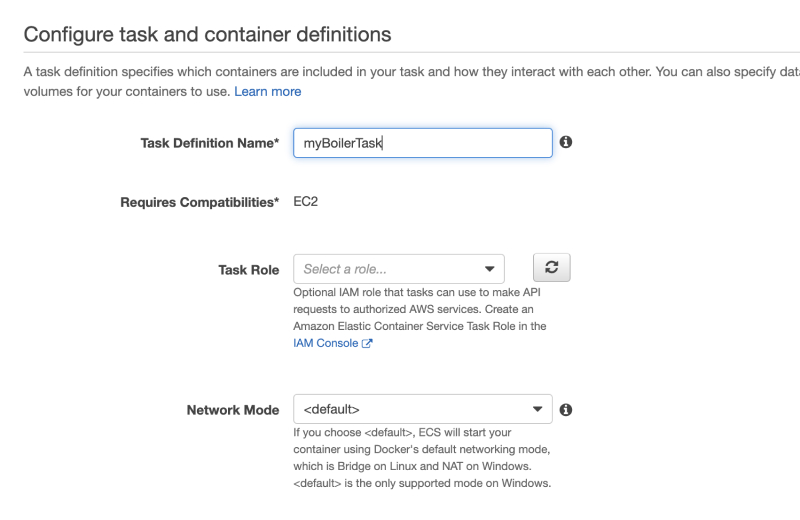

Give your task a name. Scroll down, and click on Add Container.

Configure task and container definitions

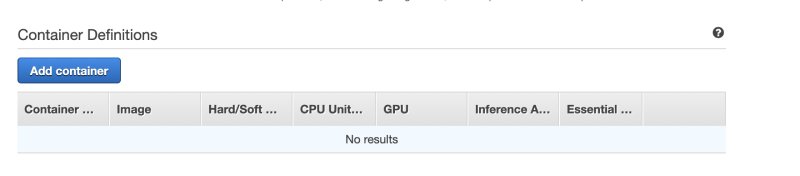

Container definitions

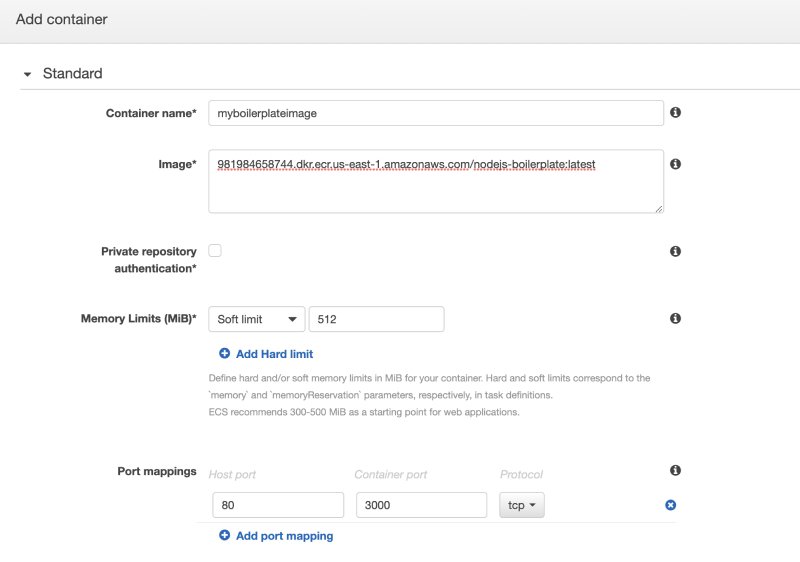

You now need the URI of the repository from ECR. Make sure you copy it with the tag, which is latest. Next comes the port mapping. It’s important to tell AWS which ports to expose. In this case, we are redirecting all traffic from port 80 on the host to port 3000, which is the port of the application running inside the container.

Add container

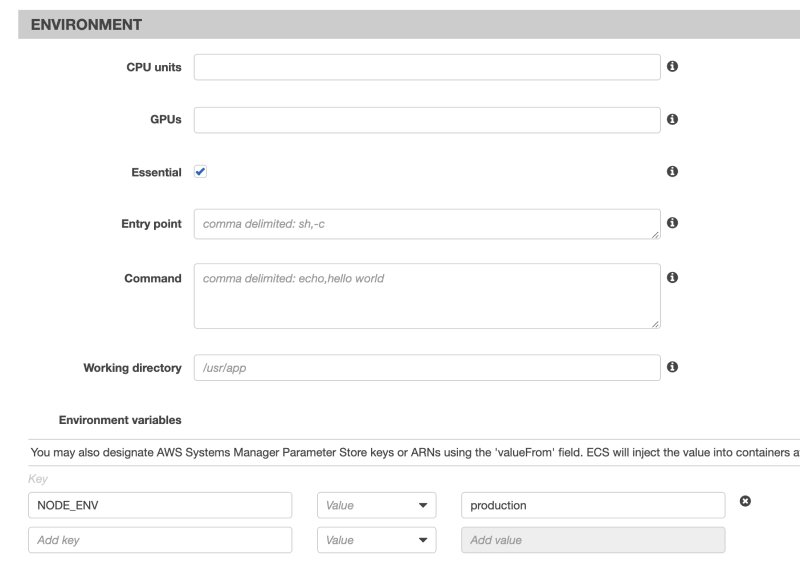

Scroll down and configure the Environment variables. Set them to Production and then click Create.

Environment

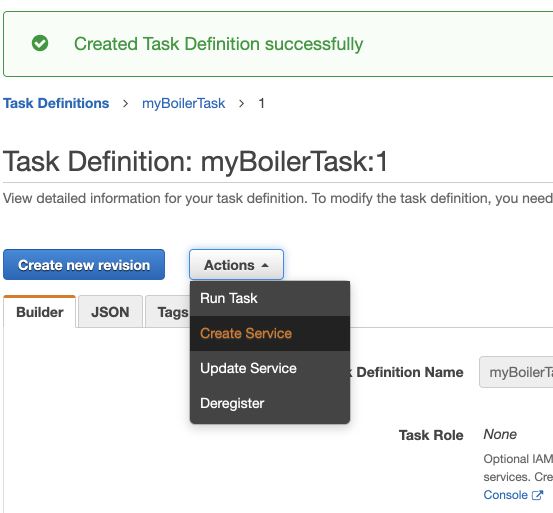

Once the task definition has been created, we need to create a service for it. Click on Actions and select Create Service.

Task definitions successfully created

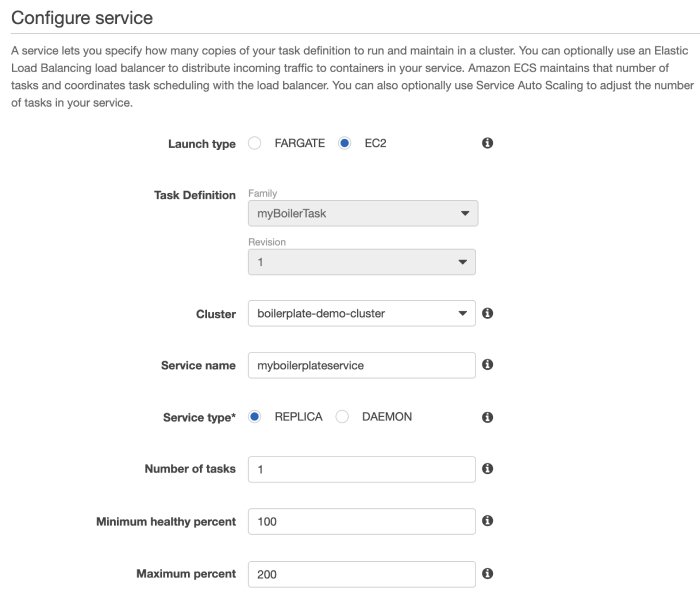

The launch type is EC2 and the number of tasks is 1.

Configure service

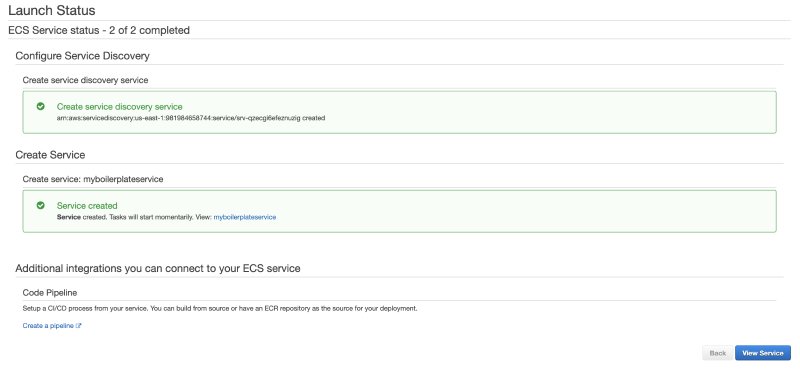

Move forward and create the service. You should have the following success screen:

Launch status 2

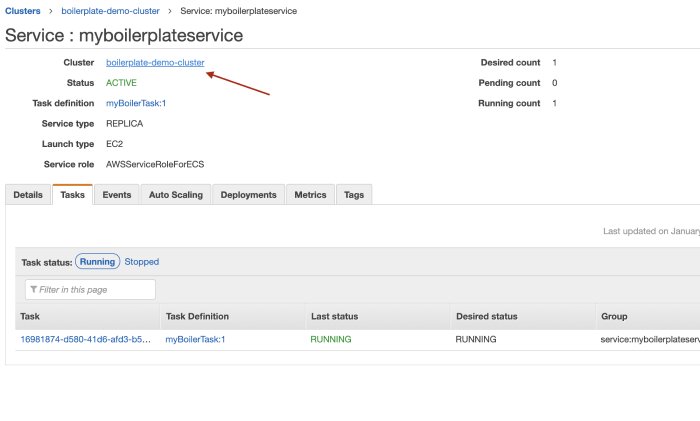
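For reference, the same service could be created with the AWS CLI. This is a sketch under the settings chosen above (EC2 launch type, one task); create_service is a hypothetical helper name, and the cluster and task definition names are whatever you entered in the earlier steps.

```shell
# Hypothetical helper: create an ECS service that keeps one task running.
# $1 = cluster name, $2 = service name, $3 = task definition name.
create_service() {
  aws ecs create-service \
    --cluster "$1" \
    --service-name "$2" \
    --task-definition "$3" \
    --launch-type EC2 \
    --desired-count 1
}
```

A call would look like create_service <cluster-name> myboilerplateservice <task-definition-name>, with the placeholders replaced by your own names.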

Click on View Service and then on the Cluster

myboilerplateservice

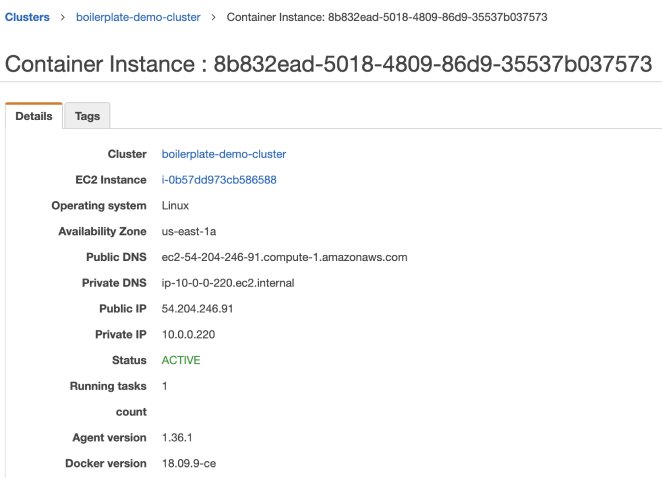

Next, go to the EC2 instances tab and click on the Container Instance.

You should finally have the public URL for viewing your running instance.

Container instance

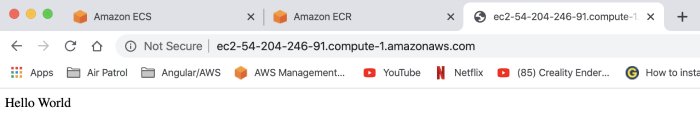

Give yourself a pat on the back if you are now able to see the following screen upon opening the Public DNS:

Opening the Public DNS

Some Key Thoughts

This might seem a bit hectic at the start, but once you make sense of things, I am sure you will have a better grasp of how they work. This setup is well suited to critical tasks that your application depends on. Rather than having a single big application, your application can be broken down into smaller applications that work independently of each other, like microservices.

Reference

https://www.statista.com/statistics/617136/digital-population-worldwide/

https://docs.docker.com/engine/reference/builder/#run

https://docs.docker.com/engine/reference/builder/#expose

https://docs.aws.amazon.com/index.html?nc2=h_ql_doc_do

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/Welcome.html

https://docs.aws.amazon.com/AmazonECR/latest/userguide/docker-basics.html


2 comments

Detailed walkthrough, but running npm install in the Docker image has several drawbacks for production images:

- it installs development dependencies by default

- it can update your package-lock.json, so it may not install the same versions

A safer and more optimized command would be:

    npm ci --only=production

Thank you for your remarks and the perfect addition to my walkthrough. This is a model example of constructive criticism.

To tell you the truth, it really took a toll on me to discover things that are so spread apart, and this walkthrough is a collection of the difficulties I ran into. I wish I'd had a guide like this to follow, so why not contribute it back, like the many contributors to whom I owe my success so far. Your point has been noted.