Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Sentiment analysis is a branch of natural language processing (NLP) that identifies and extracts the emotions or opinions expressed in text and classifies them as positive, neutral, or negative. I have come across use cases for sentiment analysis in industries such as marketing, customer care, and finance, and it is also an active topic of interest in academia. It provides key insights into customers' product preferences, product marketing, and recent trends.

In the realm of sentiment analysis, there are two primary approaches: supervised and unsupervised learning. Supervised learning needs a labeled dataset to train a model, while unsupervised learning does not depend on labeled data and instead makes use of the inherent structure of the data itself. The latter approach is especially useful when labeled data is scarce or expensive to obtain. Text data is widely available on the internet, but most of it is unlabeled, and labeling it manually would cost a huge amount of time and money. In such scenarios, unsupervised sentiment analysis comes to the rescue.

In this article, I introduce two popular unsupervised sentiment analysis algorithms: VADER (Valence Aware Dictionary and sEntiment Reasoner) and Flair. VADER is a rule-based model that uses a sentiment lexicon and grammatical rules to determine the sentiment scores of the text. Flair, on the other hand, employs pre-trained language models and transfer learning to generate contextual string embeddings for sentiment analysis. Each method has its own advantages and limitations, which I will explore in depth throughout this article.

VADER was presented by C.J. Hutto and Eric Gilbert in their paper. It is a rule-based model that relies on a predefined set of rules and a sentiment lexicon to determine the sentiment scores of a given text. The authors designed VADER to handle the irregularities of text obtained from social media and other internet sources, where the raw data often contains emoticons, slang, and acronyms. Its efficiency and adaptability to various text sources make it a popular choice for unsupervised sentiment analysis tasks.

The key components of VADER are a sentiment lexicon and a set of heuristics with grammatical rules. The sentiment lexicon is a list of words, each associated with a sentiment score representing its polarity and intensity. The heuristics and grammatical rules account for the context and structure of sentences, such as negations, booster words, and intensifiers. These components work together to calculate an overall sentiment score for a given text, capturing the sentiment expressed in a variety of language constructs.
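To make the interplay between a lexicon and the rules concrete, here is a minimal sketch of a lexicon-plus-rules scorer. It is not VADER's implementation: the word lists, the valence values, and the function name `toy_sentiment` are all hypothetical, and the real algorithm applies more rules and normalizes its output.

```python
# Toy lexicon and rule sets: hypothetical values, NOT taken from VADER's lexicon.
LEXICON = {"good": 2.0, "great": 3.0, "bad": -2.5, "terrible": -3.0}
BOOSTERS = {"very": 0.5, "extremely": 1.0}   # amplify the next sentiment word
NEGATIONS = {"not", "never", "no"}           # flip the next sentiment word


def toy_sentiment(text: str) -> float:
    """Sum word valences, applying a booster or negation found just before a word."""
    tokens = text.lower().split()
    total = 0.0
    for i, token in enumerate(tokens):
        if token not in LEXICON:
            continue
        valence = LEXICON[token]
        previous = tokens[i - 1] if i > 0 else ""
        before_previous = tokens[i - 2] if i > 1 else ""
        if previous in BOOSTERS:
            # Push the valence further from zero, in whichever direction it points.
            boost = BOOSTERS[previous]
            valence += boost if valence > 0 else -boost
        if previous in NEGATIONS or before_previous in NEGATIONS:
            # A negation within the two preceding tokens flips the polarity.
            valence = -valence
        total += valence
    return total


for sentence in ["the movie was good",
                 "the movie was very good",
                 "the movie was not good",
                 "the movie was not very good"]:
    print(sentence, "->", toy_sentiment(sentence))
```

Even this small version shows why rules matter: without the negation check, "not good" and "good" would receive the same score.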

I am always impressed by VADER's abilities as a sentiment analysis algorithm. Its efficiency allows me to generate sentiment scores quickly, making it suitable for large-scale applications. Its use of heuristics and grammatical rules enables VADER to handle negation and booster words, providing more accurate sentiment assessments. Additionally, its authors designed it to deal with the complexity of social media language, making it a versatile and adaptable tool for analyzing a wide range of text.

VADER, while useful for sentiment analysis, does have some limitations. Its language dependency means it works primarily with English text, so I cannot use it as-is for other languages. Because its contextual understanding is limited, it may produce inaccurate results when I feed it complex sentences or domain-specific language. Lastly, VADER has difficulty detecting sarcasm and irony, as these forms of expression often rely on subtle cues or context that a rule-based model may not adequately capture.

Let’s use VADER to conduct the sentiment analysis. Please note that I have used a Kaggle dataset in the example code. You may either download it from this page or just execute the code on the Kaggle platform as I do.

First things first, let me import the dataset into a Pandas data frame so that I can further process it.

```python
import pandas as pd
import numpy as np

data = pd.read_csv('/kaggle/input/sentiment-analysis-dataset/train.csv', encoding='ISO-8859-1')
```

Let me show you how the data looks.

| | textID | text | selected_text | sentiment | Time of Tweet | Age of User | Country | Population -2020 | Land Area (Km²) | Density (P/Km²) |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | cb774db0d1 | I`d have responded, if I were going | I`d have responded, if I were going | neutral | morning | 0-20 | Afghanistan | 38928346 | 652860.0 | 60 |
| 1 | 549e992a42 | Sooo SAD I will miss you here in San Diego!!! | Sooo SAD | negative | noon | 21-30 | Albania | 2877797 | 27400.0 | 105 |
| 2 | 088c60f138 | my boss is bullying me… | bullying me | negative | night | 31-45 | Algeria | 43851044 | 2381740.0 | 18 |
| 3 | 9642c003ef | what interview! leave me alone | leave me alone | negative | morning | 46-60 | Andorra | 77265 | 470.0 | 164 |
| 4 | 358bd9e861 | Sons of ****, why couldn`t they put them on t… | Sons of ****, | negative | noon | 60-70 | Angola | 32866272 | 1246700.0 | 26 |

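```python
# Keep only the tweet text and its label
data = data[['text', 'sentiment']]

# Drop the rows labeled "neutral" so that only positive and negative tweets remain
_neutral_ = data[(data['sentiment'] == 'neutral')].index
data.drop(_neutral_, inplace=True)

data['sentiment'].value_counts()
```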

I removed the rows labeled “neutral” so that the algorithms can be tested on a binary positive/negative task. After this operation, the data contains the following number of data points.

```
positive    8582
negative    7781
Name: sentiment, dtype: int64
```

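Let me give the negative sentiment label a value of “0” and the positive sentiment label a value of “1”. Moreover, the data must be preprocessed before being fed to the algorithm. I applied three preprocessing steps, implemented in the code that follows: removing non-alphanumeric characters and lowercasing each word, collapsing characters that repeat more than twice, and lemmatizing each word.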

Before continuing, make sure the ‘nltk’ library is installed on your computer; it can be installed with the “pip” command. Also, note that I have used NLTK instead of spaCy here. The reason is that NLTK is popular, and I wanted to give you a different flavor of lemmatization after the first article.

```python
import re

def trim_length(text):
    '''
    Collapse any character repeated more than 2 times in a row down to 2 occurrences
    '''
    pat = re.compile(r"(.)\1{2,}")
    return pat.sub(r"\1\1", text)

def word_correct(myStr):
    '''
    Accept a string, remove the non-alphanumeric characters from each word,
    lowercase it, and trim repeated characters
    '''
    myStr = str(myStr)
    # Match anything that is not a letter or a digit (0-9)
    pat = re.compile(r'[^a-zA-Z0-9]+')
    corrected_str = ''
    splits = myStr.split()
    for word in splits:
        word = word.strip()
        word = re.sub(pat, '', word).lower()
        word = trim_length(word)
        corrected_str = corrected_str+word+' '
    corrected_str = corrected_str.strip()
    return corrected_str
```

```python
import nltk
from nltk.stem import WordNetLemmatizer
from nltk.corpus import wordnet

# Resources for tokenization, POS tagging, and WordNet lemmatization.
# Resource names can differ between NLTK versions; newer releases may ask for
# 'punkt_tab' and 'averaged_perceptron_tagger_eng' instead.
nltk.download('punkt')
nltk.download('averaged_perceptron_tagger')
nltk.download('wordnet')

def get_pos(word):
    # Map the first letter of the POS tag to the matching WordNet constant
    tag = nltk.pos_tag([word])[0][1][0].upper()
    tag_dict = {"J": wordnet.ADJ,
                "N": wordnet.NOUN,
                "V": wordnet.VERB,
                "R": wordnet.ADV}
    return tag_dict.get(tag, wordnet.NOUN)

def lemmatize_word(myStr):
    myStr = str(myStr)
    lemmatizer = WordNetLemmatizer()
    lst_word = nltk.word_tokenize(myStr)
    final_str = ''
    for word in lst_word:
        word = lemmatizer.lemmatize(word, get_pos(word))
        final_str = final_str+word+' '
    final_str = final_str.strip()
    return final_str

data['text'] = data['text'].apply(lambda i: word_correct(i))
data['text'] = data['text'].apply(lambda i: lemmatize_word(i))
```
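To see what the cleaning step does to a real tweet, the following sketch runs the two regular expressions on sample rows from the table above. It is a condensed, standalone variant rather than the article's exact helpers: the name `clean` is hypothetical, it assumes all ten digits should be kept, and unlike `word_correct` it skips words that become empty.

```python
import re

# Patterns mirroring the cleaning helpers, reproduced so this snippet runs on its own.
REPEATS = re.compile(r"(.)\1{2,}")        # a character repeated three or more times
NON_ALNUM = re.compile(r"[^a-zA-Z0-9]+")  # anything that is not a letter or digit


def clean(text: str) -> str:
    """Lowercase each word, drop non-alphanumeric characters, collapse long repeats to two."""
    words = []
    for word in text.split():
        word = NON_ALNUM.sub("", word).lower()
        word = REPEATS.sub(r"\1\1", word)
        if word:  # skip words that consisted only of punctuation
            words.append(word)
    return " ".join(words)


print(clean("Sooo SAD I will miss you here in San Diego!!!"))
# soo sad i will miss you here in san diego
```

Note that "Sooo" becomes "soo" rather than "so": the pattern only shortens long runs, it does not try to recover the dictionary spelling.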

Following preprocessing, it’s crucial to check for any newly formed empty strings and to remove such rows. Otherwise, the algorithm might not work as intended or its accuracy might be compromised.

In order to later determine the accuracy of the algorithm’s output, I have also separated the sentiment score (the numeric label) from the text data.

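```python
# Treat strings that are empty or whitespace-only after preprocessing as missing, then drop them
data = data.replace(r'^\s*$', float('NaN'), regex=True)
data = data.dropna()

# Encode the labels as described earlier: negative -> 0, positive -> 1
data['sentiment_score'] = data['sentiment'].map({'negative': 0, 'positive': 1})

X = data['text']
y = data['sentiment_score']

print(X.isna().sum())
print(y.isna().sum())
```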

Output:

```
0
0
```

Now I am ready to feed the data to the VADER algorithm. Let us do that.

```python
import nltk
nltk.download('vader_lexicon')

from nltk.sentiment.vader import SentimentIntensityAnalyzer

analyzer = SentimentIntensityAnalyzer()

def vader_sentiment_result(data):
    data = str(data)
    scores = analyzer.polarity_scores(data)
    # Label 0 (negative) only when the negative share exceeds the positive share
    if scores["neg"] > scores["pos"]:
        return 0
    else:
        return 1

score_vader = X.apply(lambda i: vader_sentiment_result(i))
score_vader.value_counts()
```

Output:

```
1    11073
0     5289
```

As you can see, the VADER algorithm has labeled far more data points as positive than the original dataset contains. I will evaluate the algorithm’s correctness when contrasting it with the Flair algorithm.
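One possible contributor to the surplus of positive labels is the tie-breaking in the labeling function: whenever the `neg` and `pos` scores are equal, including the case where no word matches the lexicon and both are zero, the text falls into the `else` branch and is labeled 1. The sketch below contrasts that rule with a rule based on the `compound` score, using the commonly cited thresholds of ±0.05. The score dictionaries are hypothetical examples in the shape returned by `polarity_scores`, and the function names are my own.

```python
def label_neg_vs_pos(scores: dict) -> int:
    """Rule used in this article: 0 only when 'neg' strictly exceeds 'pos', otherwise 1."""
    return 0 if scores["neg"] > scores["pos"] else 1


def label_compound(scores: dict, threshold: float = 0.05):
    """Alternative rule: use the compound score and return None inside the neutral band."""
    if scores["compound"] >= threshold:
        return 1
    if scores["compound"] <= -threshold:
        return 0
    return None  # neither clearly positive nor clearly negative


# Hypothetical score dictionaries, not real VADER output.
no_lexicon_hit = {"neg": 0.0, "neu": 1.0, "pos": 0.0, "compound": 0.0}
clearly_negative = {"neg": 0.6, "neu": 0.4, "pos": 0.0, "compound": -0.7}

print(label_neg_vs_pos(no_lexicon_hit), label_compound(no_lexicon_hit))
print(label_neg_vs_pos(clearly_negative), label_compound(clearly_negative))
```

With a binary ground truth, the undecided cases still have to be assigned somewhere, so the compound rule is not automatically better; the point is only that ties are counted as positive here.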

Flair is a state-of-the-art NLP library developed by Zalando Research. It focuses on generating contextual string embeddings for a variety of NLP tasks, including sentiment analysis. Unlike rule-based models such as VADER, Flair uses pre-trained language models to create context-aware embeddings, which can then be fine-tuned for specific tasks. This approach allows Flair to capture more nuanced and complex language patterns.

The key components of Flair are its pre-trained language models and the application of transfer learning and fine-tuning. Pre-trained language models capture the contextual information of words within a sentence, which provides a solid foundation for various NLP tasks including sentiment analysis. Transfer learning lets Flair reuse the knowledge stored in these pre-trained models, and fine-tuning adapts them to a specific task such as sentiment analysis.

Flair has several advantages for sentiment analysis and other NLP tasks. Its improved contextual understanding, achieved through context-aware embeddings, enables more accurate sentiment detection, especially in complex sentences. Its support for multiple languages makes sentiment analysis viable beyond English. Additionally, Flair’s applicability extends beyond sentiment analysis to NLP tasks such as named entity recognition, part-of-speech tagging, and text classification. This gives you an idea of why Flair is so popular in industry and academia.

Despite its advantages, Flair also has some limitations. Its high complexity can lead to increased processing times, which makes it less suitable for real-time applications or large-scale data analysis. Since Flair relies on contextual embeddings rather than explicit rules, it is less interpretable, which can make it challenging to understand the underlying factors contributing to a sentiment prediction. Lastly, Flair depends heavily on the quality and coverage of its pre-trained models, so its effectiveness in a specific domain or language is constrained by the availability of a suitable pre-trained model.

One of Flair’s biggest features is that it offers pre-trained models that are quite simple to utilize with the Flair libraries. In various categories of natural language processing, Flair has fared better than a wide range of prior models.

Let me show you the Flair sentiment analysis example. With the ‘pip’ command as shown below, you can install Flair if it isn’t already on your computer.

```
pip install flair
```

Note that I have already preprocessed the data before feeding it to VADER so I do not need to do it again.

```python
from flair.models import TextClassifier
from flair.data import Sentence

# Load Flair's pre-trained English sentiment classifier
classifier = TextClassifier.load('en-sentiment')

def flair_sentiment_score(data):
    data = str(data)
    data = Sentence(data)
    classifier.predict(data)
    # The predicted label is attached to the Sentence object in place
    if data.labels[0].to_dict()['value'] == 'NEGATIVE':
        return 0
    else:
        return 1

score_flair = X.apply(lambda i: flair_sentiment_score(i))
score_flair.value_counts()
```

Output:

```
1    8454
0    7908
```

You can see that Flair has identified fewer text instances as positive than VADER.

Both VADER and Flair have their own advantages and limitations. I have listed some of them below.

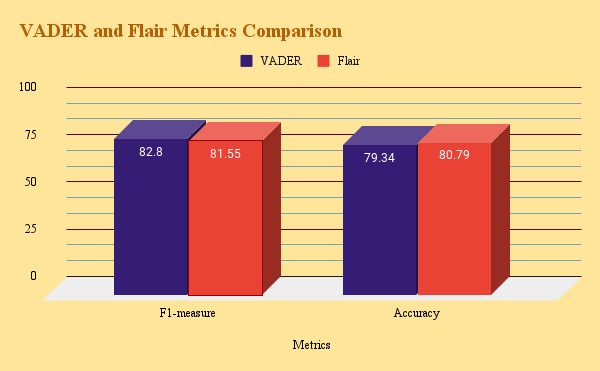

So far this has been a qualitative comparison. Let me show you the actual comparison of VADER and Flair using accuracy and the F1-measure. I have used the scikit-learn library to calculate both metrics.

```python
from sklearn.metrics import accuracy_score, f1_score

accu_score_vader = accuracy_score(y, score_vader)
print("Accuracy of VADER:", accu_score_vader)

accu_score_flair = accuracy_score(y, score_flair)
print("Accuracy of Flair:", accu_score_flair)

f1_score_vader = f1_score(y, score_vader)
f1_score_flair = f1_score(y, score_flair)
print("F1-score VADER: ", f1_score_vader)
print("F1-score Flair: ", f1_score_flair)
```

Result:

| Metrics | VADER | Flair |
|---|---|---|
| Accuracy | 79.34% | 80.79% |
| F1-measure | 82.80% | 81.55% |
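It may look odd that one model wins on accuracy while the other wins on F1. The two metrics reward different things: accuracy counts every correct prediction, while F1 for the positive class ignores true negatives. The sketch below computes both by hand on a toy set of ten labels (invented for illustration, not taken from the dataset) where a model that predicts "positive" generously ends up with lower accuracy but higher F1 than a cautious one.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def f1_positive(y_true, y_pred):
    """F1 for class 1: 2*TP / (2*TP + FP + FN). True negatives play no role."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Toy data: five positives followed by five negatives.
y_true    = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
generous  = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]  # finds every positive, with 3 false alarms
cautious  = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]  # no false alarms, but misses 2 positives

print("generous:", accuracy(y_true, generous), round(f1_positive(y_true, generous), 3))
print("cautious:", accuracy(y_true, cautious), round(f1_positive(y_true, cautious), 3))
```

Which metric matters more depends on whether a missed positive or a false alarm is the costlier mistake in your application.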

Both VADER and Flair have many practical applications in various domains. They can provide insights into sentiment trends and can help in making an informed decision.

The choice between VADER and Flair depends on the specific context and requirements of each application. Computational requirements, language support, and domain-specific factors should all guide the decision.
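If computational cost is a deciding factor, it is worth measuring it on your own data rather than guessing. The helper below is a rough sketch: `texts_per_second` is a hypothetical name, and the stand-in scorer exists only so that the snippet runs without either library installed.

```python
import time
from typing import Callable, Sequence


def texts_per_second(score_fn: Callable[[str], int], texts: Sequence[str]) -> float:
    """Run score_fn over texts once and return the measured throughput."""
    start = time.perf_counter()
    for text in texts:
        score_fn(text)
    elapsed = time.perf_counter() - start
    return len(texts) / elapsed if elapsed > 0 else float("inf")


def dummy_scorer(text: str) -> int:
    """Stand-in for a real sentiment function; replace it with your own."""
    return 1 if "good" in text else 0


sample = ["good day", "bad day"] * 1000
print(f"{texts_per_second(dummy_scorer, sample):.0f} texts/second")
```

Passing `vader_sentiment_result` or `flair_sentiment_score` from this article in place of `dummy_scorer`, with a few hundred rows of `X`, gives a first estimate of how the two compare on your hardware.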

Unsupervised sentiment analysis is a developing field with great potential for applications in the real world. Some promising areas of focus include:

By addressing these challenges and embracing new technological advancements, unsupervised sentiment analysis can continue to evolve, offering increasingly accurate and reliable insights into the complex world of human emotions and opinions.

In conclusion, VADER and Flair each have their strengths and weaknesses, depending on the specific sentiment analysis task at hand. VADER is well-suited for projects with limited computational resources, a focus on social media language, and English text analysis. Flair, while computationally demanding, excels in providing more accurate sentiment predictions for complex and diverse text sources and offers multilingual support. Which one I choose depends on my project requirements.

You can find the code used in this article here.